Recent Posts (all)

Lately, I’ve been playing around with helix, a relatively new modal editor.

One of the things I was missing was an LSP for Grammarly (the only reason I can put the commas where they belong).

After struggling a bit with the configuration, I’ve finally found what works:

    [[language]]
    name = "markdown"
    auto-format = false
    file-types = [ "markdown", "md", "mdown", "txt" ]
    language-servers = [ "grammarly" ]

    [language-server.grammarly]
    command = "grammarly-languageserver"
    args = ["--stdio"]
    config = { clientId = "client_BaDkMgx4X19X9UxxYRCXZo"}

Note that:

- You need to install the Grammarly language server first.

- clientId is not a secret and is the same for all installations.

By contrast the illiberal left put their own power at the centre of things, because they are sure real progress is possible only after they have first seen to it that racial, sexual and other hierarchies are dismantled.

I missed this article when it came out. What an astute analysis.

I’ve been bitten by this bug since getting a new Apple Watch.

Unfortunately, I had to resort to resetting all the location and privacy data on my iPhone, meaning iOS now continuously prompts me to grant all my apps permission for location, camera, Bluetooth, and whatnot.

I wish I had waited, though. watchOS 10.1 fixes this bug.

While reading Scaling People, specifically this passage:

“The conversation then turned to how the person might mitigate these patterns in future meetings, which was the conversation we needed to have so they could thrive at work.”

I thought about how, at least in the environment around me, people seem much more focused on improving at their job than on improving at home, that is, on what kind of partner and parent they are.

Why is that? The most obvious answer, to me, is that improving at work has effects you can literally bank on: being promoted and getting a raise.

Improving at home has benefits as well, but they’re mostly long-term and could be seen as boring: you get stable and fulfilling relationships for the long run, things you will cherish most when you’re old rather than now. Although they don’t hurt in the present, their absence can be mitigated by other things: if it doesn’t go well with your partner, you can find another while you’re young. If your son doesn’t speak to you, you can speak to your colleagues, who share the experience of a silent offspring.

But when you’re old, you don’t get a new partner that easily. You’ll hardly get someone to speak to if your children park you in a hospice.

How can we put more emphasis on improving our long-term outlook for a fulfilling life?

Very happy to share that I’ve passed the Professional Communication: Business Writing and Storytelling course by The Economist, obtaining a final grade of 87%.

What are the 7 key takeaways that fit in a short post?

- Think about the briefing: what’s the main message, who’s the audience, what’s the medium

- Then think about the key points you want to make to get your message across

- Order your key points on a map to form a journey. Is the path from one point to the next logical? Are there logical gaps?

- Write the intro — a catchy sentence or two that invites the reader to take the journey — and the executive summary — ideally covering most key points

- Then put some evidence or arguments beside each point

- At this moment, you should have a solid enough structure and text that you can produce a first draft

- Once the draft is ready, polish, polish, polish.

- [Bonus] Grammarly is an excellent tool for non-native English speakers. For example, I use commas the Italian way — it just sounds right to me — and apparently, some actual rules dictate how to use them in English.

You can enroll on their site.

Why super-strict classrooms are in vogue in Britain

Superb article by The Economist on the success of super-strict classrooms in Britain.

The fascinating points:

- Pupils work hard, and they’re not only OK with it, but it motivates them to aim high

- A strict classroom helps pupils from poorer families as children perceive discipline as safety and a promise of success (safety that they might lack at home or in other schools)

- Speaking of success, the school weaponizes praise, and children strive for merit points. Competition is not only biologically embedded but also raises everyone’s grades as the school is among the best in Britain.

- Poetry is embedded in the school, with lunchtime poetry recitals:

A young teacher stands at the front and shouts the first word of each line; the pupils respond with the rest.

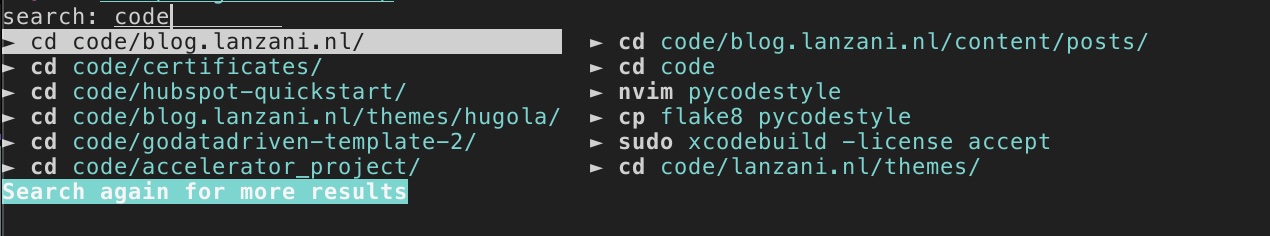

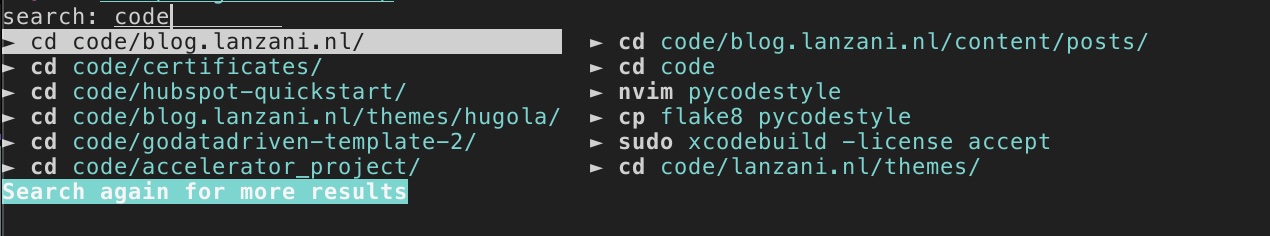

Amazing new release of fish, adding Control-R to open the command history in the pager.

It’s fully searchable and syntax-highlighted!

Get it here.

If you have 2 hours to spare, read Nietzsche and the Nazis by Stephen Hicks.

Fantastic, to-the-point book about the philosophical ideals of the National Socialists.

To quote just a single passage:

History has taught us that the philosophy and ideals the Nazis stood for were and are false and terribly destructive, but we do not do ourselves any favors by writing the Nazis off as madmen or as an historical oddity that will never happen again. The Nazis stood for philosophical and political principles that appealed to millions—that attracted some of the best minds of their generation—and that still command the minds and hearts of people in all parts of the world.

And that means we must face the National Socialists’ philosophical and political ideals for what they actually are—we must understand them, know where they came from, and what intellectual and emotional power they have. Then and only then are we in a position to defeat them. We will be able to defeat them because we will understand their power and we will have more powerful arguments with which to fight back.

Beautiful quote from Frans de Waal’s book Are We Smart Enough to Know How Smart Animals Are?:

“When I began observing the world’s largest chimpanzee colony, at Burgers’ Zoo in 1975, I had no idea that I’d be working with this species for the rest of my life. Just so, as I sat on a wooden stool watching primates on a forested island for an estimated ten thousand hours, I had no idea that I’d never again enjoy that luxury. Nor did I realize that I would develop an interest in power relations. In those days, university students were firmly antiestablishment, and I had the shoulder-long hair to prove it. We considered ambition ridiculous and power evil. My observations of the chimps, however, made me question the idea that hierarchies were merely cultural institutions, a product of socialization, something we could wipe out at any moment. They seemed more ingrained. I had no trouble detecting the same tendencies in even the most hippielike organizations. They were generally run by young men who mocked authority and preached egalitarianism yet had no qualms about ordering everyone else around and stealing their comrades’ girlfriends. It wasn’t the chimps who were odd, but the humans who seemed dishonest. Political leaders have a habit of concealing their power motives behind[…]”

Pretty Clean

A small utility to unobtrusively scan your macOS disk to remove caches and other files and folders that can be cleaned up.

Free, and built on Tauri.

There’s a Hacker News discussion as well in case you want to know more.
